You send a quick voice note through a language app. A week later, an advertisement pops up online using a voice that sounds suspiciously like yours. Same tone, same inflection. Except you never gave permission to be in an ad.

This isn’t science fiction. It’s already happening. From voice notes and selfies to casual chatbot conversations, AI apps are learning from us. In some cases, they’re using that data to generate new content, with a face or voice that looks or sounds a lot like yours.

In Malaysia’s rush to embrace digital convenience, we may be giving more of ourselves than we realise. And it’s time to ask: “Are we oversharing with AI?”

How AI learns from you, often without asking

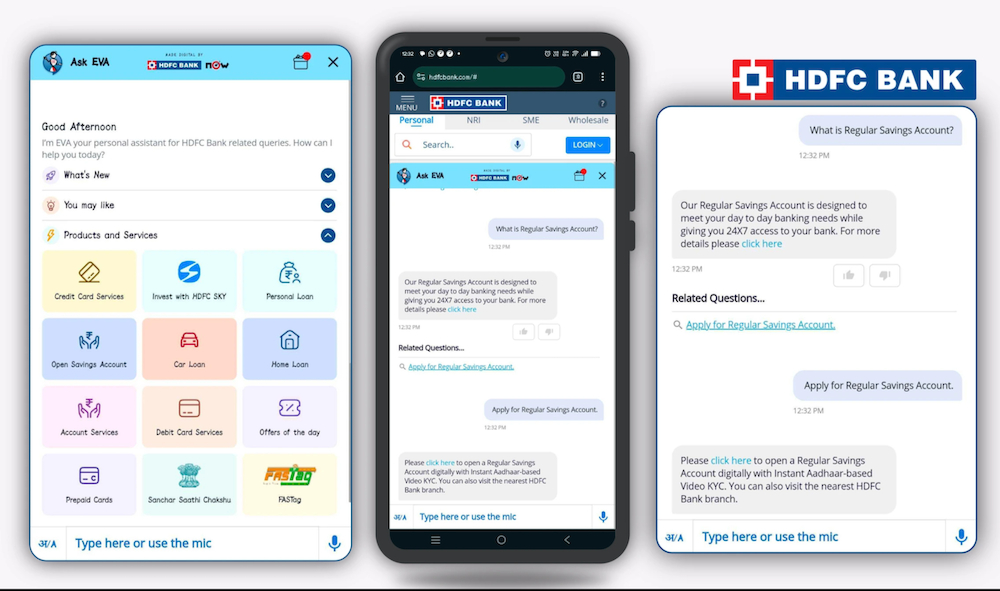

AI is everywhere. Whether you’re chatting with a bank’s virtual assistant, scrolling through Shopee’s recommended deals, or experimenting with TikTok filters, some form of AI is running in the background, watching, learning and adapting to you.

“Many people don’t realise AI isn’t new,” said Prof Dr Selvakumar Manickam, Director of the Cybersecurity Research Centre at Universiti Sains Malaysia.

“It’s been part of automation, diagnostics and finance for years. What’s different now is the rise of Generative AI – tools that create new content based on data they’re trained on. Often, that includes your data.”

He explained that systems like ChatGPT aren’t intelligent in the human sense. “They don’t understand; they predict,” he said. “These models are trained on massive amounts of data from across the internet, some of it factual, some of it not. People assume the answers are correct, but they’re often just plausible-sounding patterns.”

In Malaysia, where internet penetration is high and digital habits are deeply ingrained, AI fits naturally into daily life. But very few people stop to consider: “What happens to the data I just gave this app?”

Are we oversharing with AI apps?

Many AI tools today are designed to feel personal, friendly, even caring. That’s part of the problem.

We chat with bots like we would with a friend. We upload selfies to try on filters. We record voice notes without a second thought. Often, we do this without ever reading the terms and conditions.

“Malaysians, especially younger users, are digital natives,” said Selvakumar. “Their online and offline lives are deeply intertwined. Sharing personal thoughts, selfies, or voice notes feels normal. It’s part of social validation, trend culture, and convenience.”

He pointed to a 2024 report showing that Malaysians spend over eight hours a day online, one of the highest averages globally. “There’s a saying,” he added, “‘Once it’s on the internet, it’s there forever.’ That’s especially true when your data is used to train AI models.”

This sense of ease is precisely what many AI apps are built around – offering instant, visible rewards in exchange for something far less visible: your personal information.

When your data becomes someone else’s content

Today’s AI tools can replicate voices, mimic faces and even generate messages in your tone. All they need is a few samples.

In Malaysia, scammers have begun using AI to generate convincing deepfakes of real people’s voices and faces. In one incident reported in Selangor, a woman lost RM5,000 after receiving a phone call from someone who sounded exactly like her boss. The voice was AI-generated, cloned from publicly available data or recordings.

Authorities have also flagged the rise of online scams using deepfake videos featuring the likenesses of national figures, including Prime Minister Anwar Ibrahim and the Yang di-Pertuan Agong, to promote fraudulent investment schemes.

These examples underscore how easily facial and voice data can be misused, and how quickly AI can blur the line between real and fake when personal data is up for grabs.

“People assume that by tapping ‘accept’, they’re agreeing to basic functionality,” said Selvakumar. “But those agreements often bury vague clauses like ‘data used for service improvement’. That can mean anything, including training future AI systems.”

He added, “There is no such thing as a truly free app. If you’re not paying with money, you’re probably paying with your data.”

The ethics blind spot: Who’s drawing the line?

Malaysia’s Personal Data Protection Act (PDPA) offers some protection, but not enough. It doesn’t cover things like facial recognition, voice cloning, or cross-border data training. And many popular apps aren’t even headquartered here.

Selvakumar didn’t mince words: “The current approach – burying consent in long, unreadable terms – is unethical. Consent must be clear, specific and genuinely optional.”

He advocated for ethical AI practices that go beyond compliance:

Granular, opt-in consent for each type of data

Explainable AI (XAI), so users understand how decisions are made

Data minimisation, meaning apps should only collect what they need

The right to be forgotten, allowing users to erase their data

Third-party audits to verify privacy and safety standards

“Only with transparency, accountability, and real choice can we build public trust in AI,” he said.

Dead artistes, AI clones and cultural unease

There’s another, more haunting concern: the use of AI to resurrect deceased voices.

AI-generated songs using the voices of late artistes such as Elvis Presley, Michael Jackson and P Ramlee are already being shared online. Some see these as tributes; others see them as disrespectful.

“Using AI to create songs with the voices of deceased artistes is a complex and controversial trend,” said Selvakumar. “Technically, it’s impressive, but ethically, it’s murky. These artistes cannot grant permission, and their voices could be used in ways they’d never approve – politically, commercially, or artistically.”

He cited a case in India where an AI-generated soundtrack used the voices of dead singers, sparking public debate, even though the families were consulted.

Selvakumar supported mandatory labelling of AI-generated content, a measure already implemented in China. “If a voice or face is AI-generated, people deserve to know,” he said.

What Malaysians can do right now

While regulation evolves, users can still protect themselves with simple, everyday steps:

Pause before you share: AI isn’t your therapist or your friend

Avoid giving apps access to your camera, mic, or contacts unless necessary

Use trusted platforms with clear privacy policies and opt-out settings

Never share sensitive data (IC number, bank details) with chatbots or filters

Educate your circle, especially teens and older relatives, about AI misuse, scams and deepfakes

“Media literacy is our best defence,” said Selvakumar. “Technology will keep evolving, but if users become more critical, more informed, they can push for better standards.”

AI is not the enemy; blind trust is

AI isn’t out to harm us. It’s just doing what it was built to do – learn from what we feed it. But when that learning happens in the background, without our full understanding or consent, the risks grow.

As Malaysia moves deeper into a digital future, we need to build awareness just as quickly. AI can be incredibly useful, but only if it’s built on trust, transparency and respect for people’s rights.

Your voice, your face, your habits – these aren’t just data points. They are worth more than you think, so don’t give them away blindly. Before you share them with an app or a chatbot, ask yourself: Do I really know where this is going?

Not every app deserves that kind of access, and not every risk is worth the convenience.

This S.A.F.E. Internet Series is in collaboration with CelcomDigi.

The views expressed here are those of the author/contributor and do not necessarily represent the views of Malaysiakini.